Introduction to Generative AI - Andrew Ng

- AI is a general-purpose technology: like electricity, it's useful for a lot of things.

- The Internet is another general-purpose technology.

- Use as brainstorming partner

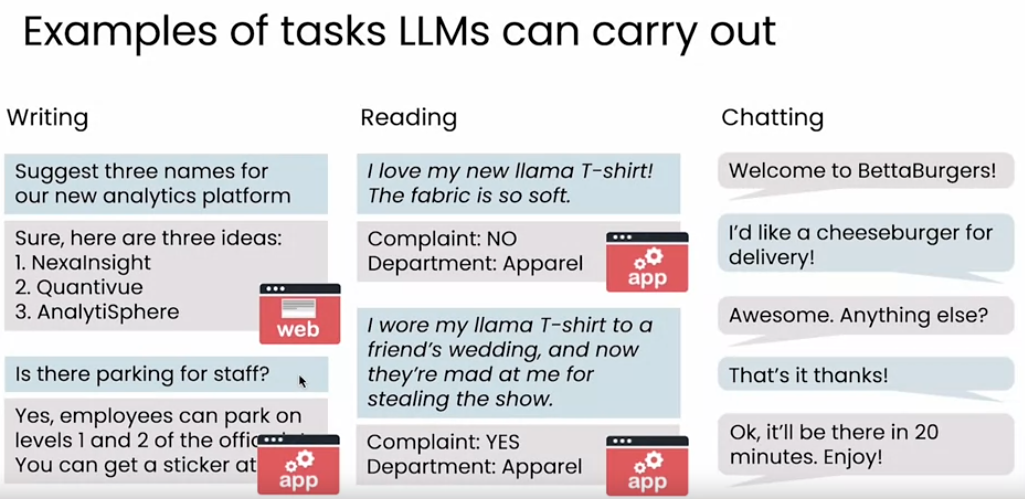

- Writing, for example drafting a press release.

- Translation, for example: translate the following into Hindi / formal Hindi / formal spoken Hindi.

- Translating text into Pirate English for testing purposes.

- Proofreading

- Summarizing a long article.

- Summarizing call centre conversations: record phone calls --> run speech recognition --> long text transcripts --> LLM summarises each conversation --> short summaries --> manager spots issues or trends.

- Specialized chatbots

- Customer service chatbot

- Trip planning chatbot

- Advice bots - career coaching, cooking a meal, etc.

- Some can ACT if sufficiently empowered.

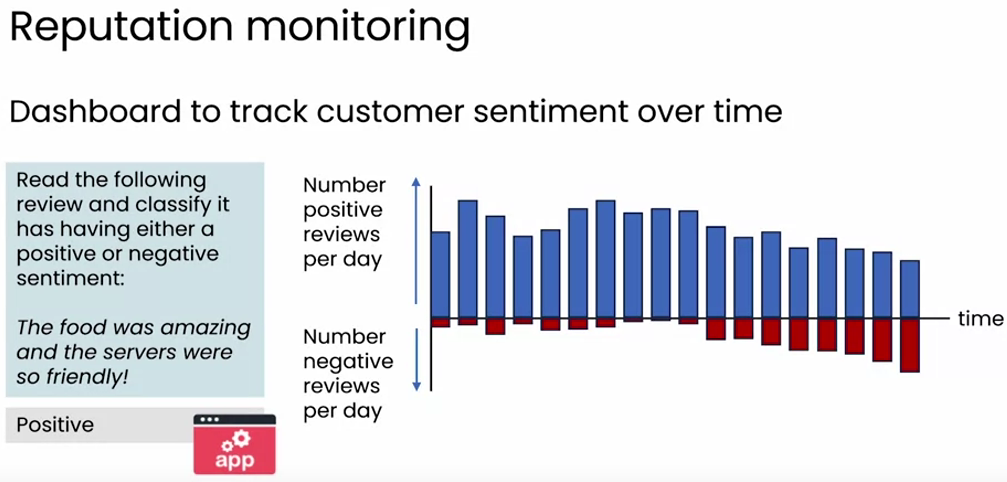

    all_reviews = [
        'The mochi is excellent!',
        'Best soup dumplings I have ever eaten.',
        'Not worth the 3 month wait for a reservation.',
        'The colorful tablecloths made me smile!',
        'The pasta was cold.',
    ]

3. Write code to classify the above reviews as positive or negative.

    # llm_response is assumed to be defined elsewhere; these notes do not show it.
    all_sentiments = []  # collects one label per review

    for review in all_reviews:
        prompt = f'''
            Classify the following review
            as having either a positive or
            negative sentiment. State your answer
            as a single word, either "positive" or
            "negative":

            {review}
            '''
        response = llm_response(prompt)
        all_sentiments.append(response)

    all_sentiments

Output will be ['positive', 'positive', 'negative', 'positive', 'negative']
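The clean list above assumes the LLM replies with exactly one lowercase word. In practice a reply may come back capitalised, quoted, or with trailing punctuation, so a small cleanup step can help before counting. The sketch below is only one way to do that; `normalize_sentiment` and the sample replies are made up for illustration and are not part of the course code.

```python
import string

VALID_LABELS = {"positive", "negative"}

def normalize_sentiment(raw_response):
    """Map a raw LLM reply onto 'positive', 'negative', or 'unknown'."""
    # Lowercase, then trim whitespace, quotes, and punctuation from both ends.
    cleaned = raw_response.strip().lower().strip(string.punctuation + string.whitespace)
    return cleaned if cleaned in VALID_LABELS else "unknown"

# Hypothetical raw replies, invented to illustrate the cleanup.
raw_replies = ["Positive", " negative.\n", '"positive"', "I think it is mixed"]
normalized = [normalize_sentiment(reply) for reply in raw_replies]
print(normalized)  # ['positive', 'negative', 'positive', 'unknown']
```

Mapping anything unrecognised to 'unknown' keeps odd replies visible instead of silently treating them as one of the two labels.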

4. Write code to count the number of positive and number of negative reviews.

    num_positive = 0
    num_negative = 0

    for sentiment in all_sentiments:
        if sentiment == 'positive':
            num_positive += 1
        elif sentiment == 'negative':
            num_negative += 1

    print(f"There are {num_positive} positive and {num_negative} negative reviews.")

Output is: There are 3 positive and 2 negative reviews.
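The same count can also be done with `collections.Counter` from the Python standard library. This is an alternative sketch, not the course's version; the `unexpected` dictionary is an addition that shows any label other than 'positive' or 'negative', which the if/elif loop would skip without notice.

```python
from collections import Counter

# Same labels as the output shown above.
all_sentiments = ['positive', 'positive', 'negative', 'positive', 'negative']

sentiment_counts = Counter(all_sentiments)
num_positive = sentiment_counts['positive']  # missing keys count as 0
num_negative = sentiment_counts['negative']

# Any label that is neither 'positive' nor 'negative' ends up here.
unexpected = {label: count for label, count in sentiment_counts.items()
              if label not in ('positive', 'negative')}

print(f"There are {num_positive} positive and {num_negative} negative reviews.")
print(f"Unexpected labels: {unexpected}")
```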

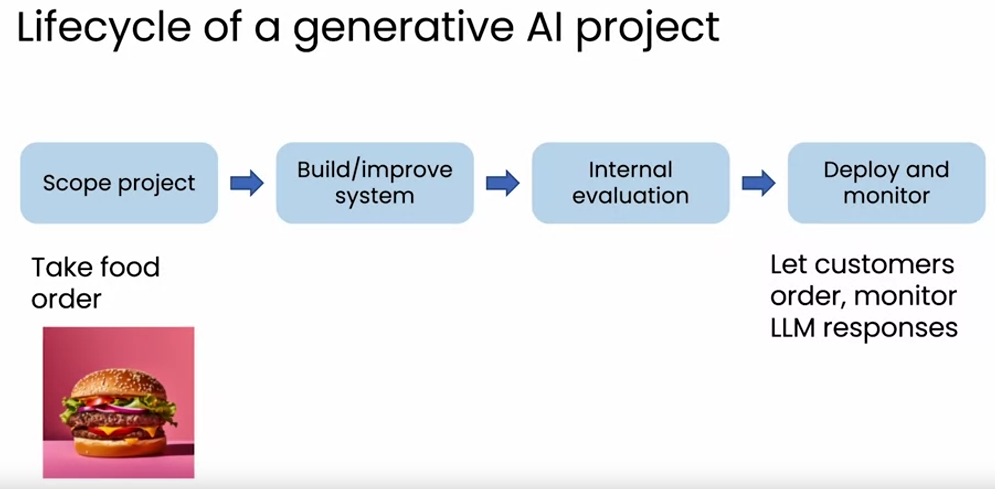

Lifecycle of a Generative AI Project

The above output is wrong because tonkotsu ramen is pork soup, so the review shown above should not be considered positive.

Now feed this back into the system to improve it.

Tools to improve performance

- Prompting is a highly empirical process

- Idea --> Prompt --> LLM response --> tweak the idea, and the cycle repeats

- Retrieval Augmented Generation (RAG)

- Give LLM access to external data sources.

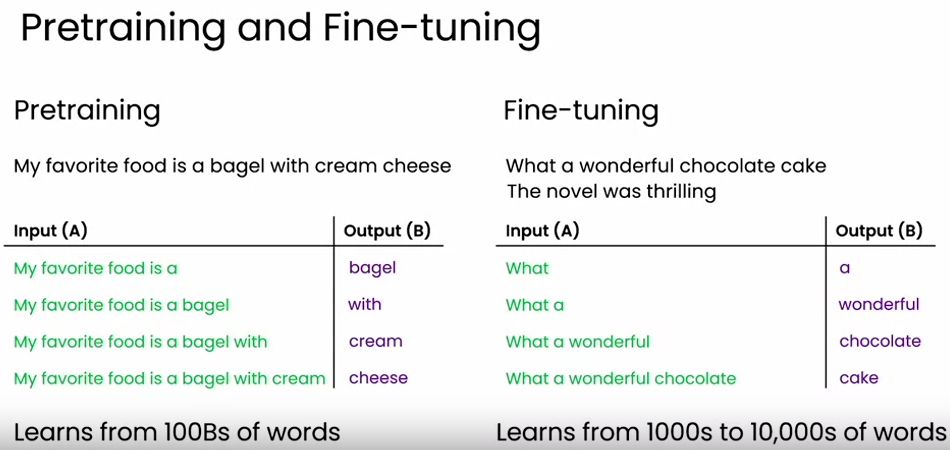

- Fine tune models

- Adapt LLMs to your task.

- Pretrain models

- Train LLMs from scratch

In the example above, where the calorific value is asked for, you can turn to RAG.

How much do LLMs Cost?

A token is a word or a subpart of a word.

300 words ≈ 400 tokens

The number of tokens is loosely about the number of words, roughly 4/3 tokens per word.
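As a rough worked example of the arithmetic, the sketch below turns a word count into an estimated token count using the 300 words to 400 tokens ratio, then into a cost. The price per 1,000 tokens is a made-up placeholder, not a real quote from any provider, and the function names are hypothetical.

```python
def estimate_tokens(num_words):
    """Estimate tokens from words using the 300 words ~ 400 tokens rule of thumb."""
    return round(num_words * 4 / 3)

def estimate_cost(num_words, price_per_1k_tokens):
    """Estimate cost for a text of num_words at a given price per 1,000 tokens."""
    return estimate_tokens(num_words) / 1000 * price_per_1k_tokens

# ASSUMPTION: placeholder price, chosen only to make the arithmetic concrete.
ASSUMED_PRICE_PER_1K_TOKENS = 0.002

words = 300
tokens = estimate_tokens(words)
cost = estimate_cost(words, ASSUMED_PRICE_PER_1K_TOKENS)
print(f"{words} words is about {tokens} tokens")        # 300 words is about 400 tokens
print(f"Estimated cost at the assumed price: {cost:.6f}")
```

Real token counts depend on the model's tokenizer, so this is only an order-of-magnitude estimate.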

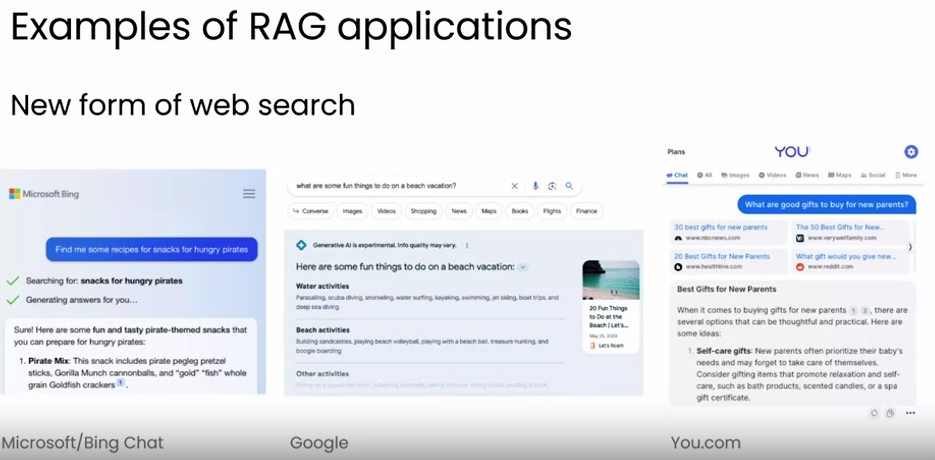

Retrieval Augmented Generation (RAG)

RAG will refer to additional inputs from your company to answer the question above.

This is called Retrieval Augmented Generation or RAG, because we're going to generate an answer to this, but we're going to augment how we generate text by retrieving the relevant context or the relevant information and augmenting the prompt with that additional text.

> AI Bots that allow you to chat with PDFs

> AIs that let you get answers to questions about a website's articles

> New form of search on web
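The retrieve-then-augment idea can be sketched in a few lines. This is a toy illustration under stated assumptions: the company documents are invented, and retrieval here is plain word overlap standing in for the embedding or search-based retrieval that real RAG systems typically use. `retrieve` and `build_augmented_prompt` are hypothetical names.

```python
import re

# ASSUMPTION: invented company documents, used only for this sketch.
DOCUMENTS = [
    "Parking: employees may park on levels 1 and 2 of the office garage.",
    "Vacation: full-time employees accrue 1.5 vacation days per month.",
    "Expenses: submit receipts within 30 days of purchase.",
]

def tokenize(text):
    """Split text into a set of lowercase words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Return the top_k documents sharing the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(documents,
                    key=lambda doc: len(question_words & tokenize(doc)),
                    reverse=True)
    return ranked[:top_k]

def build_augmented_prompt(question, documents):
    """Augment the prompt with the retrieved context before asking the LLM."""
    context = "\n".join(retrieve(question, documents))
    return (
        "Use the following context to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
    )

prompt = build_augmented_prompt("Is there parking for employees?", DOCUMENTS)
print(prompt)
# The augmented prompt would then be sent to the LLM in place of the bare question.
```

Only the generation step needs the LLM; retrieval and prompt building are ordinary code, which is why the same pattern works for PDFs, website articles, or search results.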

LLM as a Reasoning Engine

Pre-training and Fine Tuning AI

> To carry out a task that is difficult to define in a prompt.
