https://productschool.teachable.com/courses/2493899/lectures/52890708

- All Product Managers need to be AI Product Managers.

Modules

- Overview of AI: Embark on a journey through AI, beginning with core concepts before diving into the fascinating world of generative AI and large language models (LLMs).

- Build Trust in AI Products: UX Best Practices: Explore crafting AI-native user experiences that focus on fostering trust in your AI-powered products and delve into UX best practices to ensure you’re retaining loyal, happy customers.

- Create an AI User Flow: Learn how to apply the UX best practices and insights to design intuitive AI user flows, ensuring your creations are not only functional but also user-centric.

- How to Measure LLM-Powered Products: Human Evaluation: Learn to measure and optimize the performance of your LLM-powered product using human evaluation.

This micro-certification is all about empowering you to use your new skills to drive AI-native user experiences that build trust, provide real value, and meet user needs.

How is an AI Product Manager different?

- AI Product Management is not simply integrating a chat interface into a software module.

- You need to ensure that the AI products you build meet user needs and drive real value.

- You need to build user trust along the way so that you retain loyal customers.

Overview of AI

- AI is a broad concept referring to machines or systems that mimic human intelligence.

- It includes reasoning, learning, problem solving, perception, language understanding, and more.

- AI aims to create machines capable of performing tasks that would typically require human intelligence.

Types of AI - AI Landscape

- Natural Language Processing (NLP): is concerned with the interaction between computers and human language; it aims to enable machines to understand, interpret, and generate human-like text and speech.

- Neural Networks: are computational models inspired by the structure and function of the human brain. They consist of interconnected nodes (or neurons) organized into layers to process information and perform tasks, such as pattern recognition.

- Deep Learning: is a subset of machine learning that uses neural networks with multiple layers (AKA Deep Neural Networks), which allow the model to learn hierarchical representations and patterns from data.

- Computer Vision: is focused on enabling machines to interpret and understand visual information from the world. It involves image recognition, object detection, and scene understanding.

- Robotics: is the intersection of AI, hardware engineering, and computer science. It deals with the design, construction, operation, and use of robots that can interact with the physical world.

- Machine Learning: is focused on developing algorithms that enable computers to learn patterns from data and make predictions or decisions without being explicitly programmed for each task.

- Generative AI is a type of AI that can generate new content, such as text, images, music, or even code, on the fly. It leverages various AI technologies, including Deep Learning and Natural Language Processing (NLP), to generate outputs that are new rather than replicas of the input data.

- Many kinds of models fall under the "Generative" umbrella, such as LLMs (for example, Generative Pre-trained Transformers, or GPTs) for text, and Generative Adversarial Networks (GANs) and diffusion models for images and video.

- These models allow people to use natural language to instruct and interact with them, making them a dynamic way to power product experiences for end users.
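To make the Neural Networks and Deep Learning entries above more concrete, here is a minimal sketch of a forward pass through a tiny two-layer network in plain Python. The weights are arbitrary illustrative numbers (an assumption, not a trained model); in a real network they would be learned from data, and "deep" networks simply stack many more such layers.

```python
import math

def relu(x: float) -> float:
    return max(0.0, x)

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    # Each neuron (node) computes a weighted sum of all inputs plus a bias,
    # then applies a non-linear activation function.
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Illustrative, untrained weights: 2 inputs -> 3 hidden neurons -> 1 output.
HIDDEN_W = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
HIDDEN_B = [0.0, 0.1, -0.1]
OUTPUT_W = [[0.7, -0.5, 0.2]]
OUTPUT_B = [0.05]

def forward(x):
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)        # layer 1
    return dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)  # layer 2

print(forward([1.0, 2.0]))
```

Each layer's output becomes the next layer's input, which is how information flows through the interconnected layers described above.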

Generative AI existed long before ChatGPT

- Its modern beginnings go back to 2014, when Generative Adversarial Networks (GANs) were introduced. GANs were a breakthrough because the quality of the machine-generated images they produced was unprecedented.

- In 2017, Google published a research paper called “Attention Is All You Need,” which introduced the Transformer architecture. Because it relies on attention rather than processing text one word at a time, it can be trained efficiently on huge amounts of data, and it set the standard for the LLMs we use today.

- From there, the Transformer quickly became the go-to architecture for a wide range of NLP tasks and set new standards for machine translation, text generation, summarization, and more. Its ability to process all the words in a sequence in parallel made it practical to scale up models and training data, which helped put the "large" in LLM.

- In 2018, OpenAI released GPT-1 and Google created BERT, its own Transformer-based language model. Both leveraged the Transformer architecture that Google had introduced in 2017.

- Finally, at the end of 2022, after years of testing and iterating on various versions of its GPTs, OpenAI released ChatGPT, an end-user product powered by GPT-3.5, and it spread like wildfire, launching the current AI wave.
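The "attention" mechanism behind the Transformer can be sketched in a few lines. This is a minimal, plain-Python version of scaled dot-product attention, softmax(Q·Kᵀ / √d_k)·V, using toy 2-dimensional vectors as an assumption; real models use learned projections, many attention heads, and GPU matrix multiplications, which is what lets every position be processed in parallel.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    # Each query (position) scores every key, so every token can "look at" every
    # other token. Written as a loop for readability; in practice this is one
    # matrix multiplication computed in parallel.
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy token vectors (illustrative)
print(attention(tokens, tokens, tokens))
```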

What is it about generative AI that makes it special?

Generative AI's output is non-deterministic.

Deterministic vs. Non-deterministic Systems

Deterministic systems: Conventional software (without AI) is deterministic, meaning the output is predictable and consistent: the same input always produces the same output. It follows a simple process: it takes user input, processes it through code, and reliably produces a predictable output every time.

Non-deterministic systems: These systems do not always produce the same output for a given input, introducing an element of randomness or variability into their responses. They follow a different process: the output can vary from run to run (in LLMs, largely because each next word or token is sampled from a probability distribution), so a single input has multiple potential outcomes.
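A minimal sketch of the contrast: a deterministic function next to a sampling step similar to how an LLM picks its next word. The word scores below are invented for illustration (an assumption, not real model output), and `temperature` must be greater than 0; higher values spread probability across more words and increase variety.

```python
import math
import random

def deterministic_greeting(name: str) -> str:
    # Conventional code: the same input always yields the same output.
    return f"Hello, {name}!"

# Hypothetical next-word scores a language model might assign (illustrative only).
NEXT_WORD_SCORES = {"great": 2.0, "good": 1.5, "fine": 1.0, "terrible": -1.0}

def sample_next_word(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    # Non-deterministic step: turn scores into probabilities (softmax with
    # temperature), then randomly sample one word according to them.
    words = list(scores)
    exps = [math.exp(scores[w] / temperature) for w in words]
    total = sum(exps)
    probs = [e / total for e in exps]
    return rng.choices(words, weights=probs, k=1)[0]

rng = random.Random()  # unseeded, so results can differ between runs
print([deterministic_greeting("Ada") for _ in range(3)])                  # always identical
print([sample_next_word(NEXT_WORD_SCORES, 1.0, rng) for _ in range(5)])  # may vary
```

Fixing the random seed makes the sampling reproducible, and a very low temperature makes the most likely word win almost every time; both are common ways teams reduce variability when testing.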

AI-native UX

UI/UX will be significantly different with AI around. Check out the Google Gemini interface below.

For non-deterministic systems, the content can be different every time.

- AI opens up a whole new way to personalize information by generating new interfaces on the fly, offering interactions that users might not even know they needed.

- Below is an experimental feature from Google Gemini.

Multimodal reasoning

In the context of artificial intelligence and cognitive science, "modal" and "modalities" refer to different types or modes of sensory information or input that humans and AI systems can perceive and process. These modalities typically include:

- Visual

- Auditory

- Textual

- Etc.

The term "multimodal" here refers to the integration and reasoning with information from multiple modalities simultaneously. For example, in understanding a scene, an AI system might need to reason with both visual information from an image and textual information from accompanying descriptions or captions.

- Understand users' intent.

- Generate bespoke user interfaces that go beyond chat interfaces. ("Bespoke" describes something custom-made or tailored to an individual's preferences, needs, or requirements.)

- After gathering context by asking a series of questions, Gemini responds by creating a bespoke interface for exploring ideas.

- It is visually rich, full of ideas, and interactive (the user can hover over each idea to explore further).

- None of this was hand-coded. It was generated by Gemini on the fly.

- Gemini goes through a series of reasoning steps, moving from broad decisions to increasingly higher-resolution reasoning, and finally to code and data.

- 0 - Classification: does the request even need a UI, or can a text response solve it?

- 1 - Clarification: this is a complex request where a lot of information needs to be presented in a complex way.

- 2 - Clarification: do I know enough to help? If not, ask more questions (e.g., what the user's daughter is interested in and what kind of party they want; Gemini prompted the user with these questions).

- 3 - PRD: Gemini writes a product requirements document describing the functionality the experience (UI) will have, such as showing different party themes, activities, and food options.

- 4 - Layout selection: based on the PRD, Gemini designs the best UI for the user's journey (a list of options, a detailed list layout, etc.).

- 5 - Template: based on the above, Gemini writes the Flutter code to compose the widgets and implement any functionality that is needed.

- 6 - Data model: finally, it generates and retrieves any data needed to render the experience, filling in content and images for the various sections.
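The staged flow above can be sketched as a pipeline that moves from broad decisions to concrete output and stops early when a later stage is not needed. Gemini's actual implementation is not public: every function, field, and keyword rule here is a hypothetical stand-in for what would really be a model call, and the widget names are placeholders rather than real Flutter widgets.

```python
from dataclasses import dataclass, field

@dataclass
class Request:
    text: str
    known_details: dict = field(default_factory=dict)

# Hypothetical details a party-planning experience needs before building a UI.
REQUIRED_DETAILS = ["child_interests", "party_style"]

def needs_ui(req: Request) -> bool:
    # Steps 0-1 (classification): a crude keyword rule stands in for a model
    # deciding whether a rich UI is warranted or plain text is enough.
    return "party" in req.text.lower()

def missing_details(req: Request) -> list[str]:
    # Step 2 (clarification): which required details are still unknown?
    return [d for d in REQUIRED_DETAILS if d not in req.known_details]

def plan_experience(req: Request) -> dict:
    if not needs_ui(req):
        return {"type": "text", "content": "A plain text answer is enough."}
    missing = missing_details(req)
    if missing:
        return {"type": "questions", "ask": missing}  # ask before building anything
    prd = {"sections": ["themes", "activities", "food"]}                      # step 3: PRD
    layout = {"sections": prd["sections"], "style": "list-detail"}            # step 4: layout
    template = {"layout": layout, "widgets": ["option_list", "detail_card"]}  # step 5: template
    data = {s: f"generated content for {s}" for s in prd["sections"]}         # step 6: data
    return {"type": "ui", "template": template, "data": data}

print(plan_experience(Request("Help me plan a birthday party")))
```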

A follow-up prompt asking to see cake toppers produces a different UI, with drop-down menus for choosing an animal and a material type.

AI-native UX in Detail

AI-native UX design means building the user experience from the ground up around AI capabilities.

This means your primary goal, when building great UX for your AI-powered feature, is to build user trust by:

Making things efficient, clear, adaptable, and inclusive.

Bad UX vs Good UX

HOW TO BUILD USER CONFIDENCE - UX BEST PRACTICES

- Trust & verify: Cite sources for the content your AI generates so users can verify it. This builds trust and makes the model's output more transparent, a step toward explainable AI. Below are examples: Duet AI and Google Gemini V2.

- Fail Gracefully: Plan for things going wrong. Be transparent, allow retries, or offer helpful solutions to your users.

One example is mitigating model outages: if one model fails, route the request to another model to generate the output. For example, if OpenAI is down, route the request to Cohere or Gemini.
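A minimal sketch of that fallback routing, assuming each model is wrapped in a function that takes a prompt and either returns text or raises a custom `ModelUnavailable` error. The wrapper functions and error type are hypothetical; real provider SDKs raise their own exception types, which you would translate into something like this.

```python
from typing import Callable

class ModelUnavailable(Exception):
    """Raised by a provider wrapper when its model cannot serve the request."""

# A provider is any function that takes a prompt and returns generated text.
Provider = Callable[[str], str]

def generate_with_fallback(prompt: str, providers: list[tuple[str, Provider]]) -> tuple[str, str]:
    """Try providers in priority order; return (provider_name, output) from the first that succeeds."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ModelUnavailable as exc:
            errors.append(f"{name}: {exc}")  # record why, then try the next model
    # Every provider failed: raise a clear, user-presentable error so the UI
    # can explain what happened and offer a retry instead of crashing silently.
    raise RuntimeError("All models are unavailable, please try again. (" + "; ".join(errors) + ")")

# Hypothetical stand-ins for real SDK calls (e.g., an OpenAI or Cohere client).
def primary_model(prompt: str) -> str:
    raise ModelUnavailable("service down")

def backup_model(prompt: str) -> str:
    return f"Answer to: {prompt}"

print(generate_with_fallback("Summarize my notes", [("primary", primary_model), ("backup", backup_model)]))
```

Returning the provider name alongside the output makes it easy to log how often fallbacks happen, which is useful when deciding whether the primary model is reliable enough.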

- Guided Onboarding: Onboard users so they don't feel frustrated or face a steep learning curve. Invest in the new-user onboarding experience and guide users in the right direction.

Another example is ChatGPT's starter prompts.

- Guided Prompting: Writing good prompts is a lot of work. Guided prompting helps novice users structure their input. Guide and control the user experience: decide where users can be creative, where they provide more structured data, and what their options are.

- Provide Value: Ensure that if your content fails to generate, or generates something the user did not want, you can still deliver some type of value to the user.

For example, if the user asks for a specific image (say, a teacher teaching a child), you could also search your stock image repository and share some of those images alongside the AI-generated ones.

The intent is to know about these pitfalls and mindfully design the UX to deliver value to the customer.
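The stock-image idea can be sketched as a results builder that always returns something useful. The tag-based stock library, the naive keyword matching, and the failing generator are all hypothetical; catching a broad `Exception` here is a deliberate simplification so that any generation failure falls back gracefully.

```python
from typing import Callable

def search_stock_images(query: str, library: dict[str, list[str]]) -> list[str]:
    # Naive keyword match: return stock images whose tags share a word with the query.
    words = set(query.lower().split())
    return [url for url, tags in library.items() if words & set(tags)]

def build_results(query: str, generate: Callable[[str], list[str]],
                  library: dict[str, list[str]], max_stock: int = 3) -> dict:
    try:
        generated = generate(query)
    except Exception:
        generated = []  # generation failed; fall back instead of showing an empty page
    stock = search_stock_images(query, library)[:max_stock]
    # Offer a retry when nothing was generated, but still return stock matches.
    return {"generated": generated, "stock": stock, "offer_retry": not generated}

# Hypothetical stock library (image path -> tags) and a generator that fails.
LIBRARY = {"stock/classroom.jpg": ["teacher", "classroom", "child"], "stock/beach.jpg": ["beach", "sunset"]}

def failing_generator(query: str) -> list[str]:
    raise TimeoutError("image model timed out")

print(build_results("teacher teaching a child", failing_generator, LIBRARY))
```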

Another example: Notion AI's Q&A feature.

- It also surfaces good old keyword search results and a "try again" option.

- Check for assumptions: These may appear in text, voice, or images. In the example below, adding the keyword "inclusive representation" helped the model depict people of diverse races. Work with your model to avoid inaccuracies, false assumptions about your users, etc.

Architecting user flows

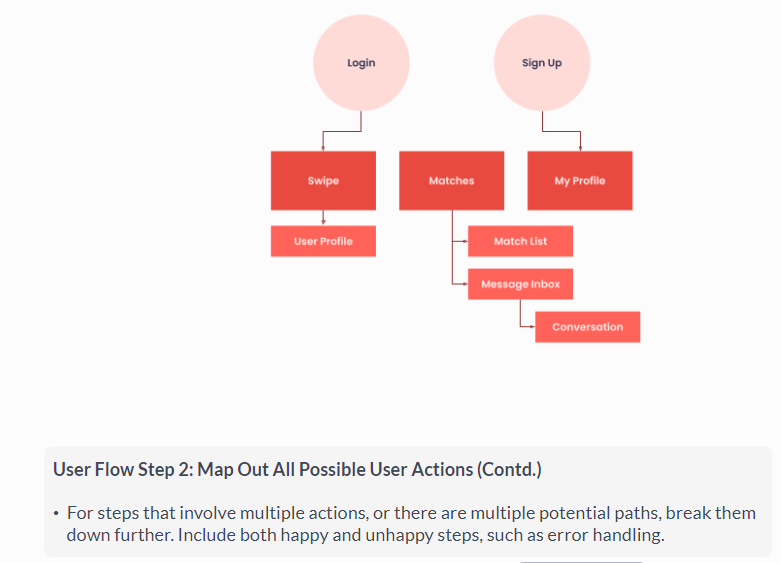

User flows are visual representations of a user's journey through a product. In other words, it's the visual path a user takes to achieve a specific goal or job-to-be-done within your product. This path is represented via a series of steps or actions a user takes.

- Creating user flows helps understand and optimize the user experience.

- Traditional user flows include entry points, decisions and actions, and final goals or objectives.

When it comes time to architect user flows for AI-powered products, especially ones that use LLMs, additional documentation is necessary.

For AI-powered products, pay special attention to data inputs, prompts, and failing gracefully.

- For AI-native UX, understand how data will flow through the product.

- Highlight where the data inputs and data outputs are.

- Identify the success and failure states.

- Provide value

- Fail gracefully.

How to create an AI User Flow / AI Systems diagram

- Create a user flow diagram showing how data will flow through the product, where content is generated, and the success and failure states.
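One way to keep such a diagram honest is to write the flow down as data and check it automatically. This is a minimal sketch assuming a hypothetical image-generation feature; the step names, step kinds, and the two checks (every AI generation step has a failure path, and no transition points at a missing step) are illustrative choices, not a standard format.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Step:
    name: str
    kind: str                          # "input", "ai_generation", "output", or "fallback"
    on_success: Optional[str] = None   # next step when this step succeeds
    on_failure: Optional[str] = None   # next step when it fails (None = no failure path)

# Hypothetical flow for an AI image-generation feature.
FLOW = {
    s.name: s for s in [
        Step("enter_prompt", "input", on_success="generate_image"),
        Step("generate_image", "ai_generation", on_success="show_results",
             on_failure="show_stock_and_retry"),
        Step("show_results", "output"),
        Step("show_stock_and_retry", "fallback", on_success="enter_prompt"),
    ]
}

def steps_missing_failure_path(flow: dict) -> list[str]:
    # Every AI generation step should route somewhere graceful when it fails.
    return [s.name for s in flow.values() if s.kind == "ai_generation" and s.on_failure is None]

def dangling_links(flow: dict) -> list[tuple[str, str]]:
    # Transitions that point at steps that do not exist in the diagram.
    return [
        (s.name, target)
        for s in flow.values()
        for target in (s.on_success, s.on_failure)
        if target is not None and target not in flow
    ]

print(steps_missing_failure_path(FLOW), dangling_links(FLOW))
```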

Measuring LLM Product success

- Human evaluation: Human reviewers assess the quality of outputs by rating them against predefined criteria.

- Automated assessment: Use algorithms or tools to systematically evaluate outputs against specific performance metrics.

- User feedback: Gather direct inputs from users.
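For the automated assessment bullet, a minimal sketch of cheap rule-based checks run over a batch of outputs. The specific checks (word limit, required and banned terms, unfilled `[PLACEHOLDER]` tokens) are hypothetical examples; you would replace them with metrics tied to your own product's requirements.

```python
import re

def automated_checks(output: str, max_words: int = 150,
                     required_terms: tuple[str, ...] = (),
                     banned_terms: tuple[str, ...] = ()) -> dict[str, bool]:
    """Run cheap, rule-based checks on one generated output."""
    lowered = output.lower()
    return {
        "within_length": len(output.split()) <= max_words,
        "has_required_terms": all(t.lower() in lowered for t in required_terms),
        "no_banned_terms": not any(t.lower() in lowered for t in banned_terms),
        # Catch template placeholders such as [ORDER_ID] that were never filled in.
        "no_unfilled_placeholders": re.search(r"\[[A-Z_]+\]", output) is None,
    }

def pass_rate(outputs: list[str], **criteria) -> float:
    """Share of outputs that pass every check (a simple metric to track over time)."""
    results = [automated_checks(o, **criteria) for o in outputs]
    return sum(all(r.values()) for r in results) / len(results)

batch = ["Thanks, your refund has been processed.", "Refund denied because [REASON]."]
print(pass_rate(batch, required_terms=("refund",)))
```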

Why do we need different kinds of measurement?

- Outputs can differ each time.

- Generated outputs can be unpredictable.

- Human evaluation (evals) is the most common approach, but it is time-consuming and expensive.

- It is recommended that you (the product owner/manager) do it yourself.

- You can also outsource it or hire specialized staff.

Assessment methodology / Evaluation Rubric

- Value proposition: define the value propositions you want your product to provide to users.

- Evaluation criteria: define the specific evaluation criteria that can assess the delivery of your value propositions.

- Scalable questions: Use yes/no questions or 1-5 rating-scale questions for human reviewers.

- Calibrate reviews: Create prototypical assessments so all reviewers are trained on the same expectations.

- Continually validate: Have reviewers justify their ratings to continuously calibrate.
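A minimal sketch of how such a rubric might be encoded and aggregated, assuming a hypothetical three-question rubric (two yes/no questions and one 1-5 scale question). Percent agreement between two reviewers is the simplest calibration signal; chance-corrected statistics such as Cohen's kappa are more robust but are not shown here.

```python
# Hypothetical rubric: yes/no detection questions plus one 1-5 scale question.
RUBRIC = {
    "task_completed": "yes_no",
    "followed_user_constraints": "yes_no",
    "helpfulness": "scale_1_5",
}

def validate(rating: dict) -> None:
    """Reject answers that do not match the question type."""
    for question, kind in RUBRIC.items():
        value = rating[question]
        if kind == "yes_no" and not isinstance(value, bool):
            raise ValueError(f"{question} expects True or False")
        if kind == "scale_1_5" and (isinstance(value, bool) or not isinstance(value, int)
                                    or not 1 <= value <= 5):
            raise ValueError(f"{question} expects an integer from 1 to 5")

def summarize(ratings: list[dict]) -> dict[str, float]:
    """Yes/no questions -> share of 'yes'; 1-5 questions -> average score."""
    for rating in ratings:
        validate(rating)
    return {q: sum(int(r[q]) for r in ratings) / len(ratings) for q in RUBRIC}

def percent_agreement(a: list[dict], b: list[dict], question: str) -> float:
    """Share of items on which two reviewers gave the same answer."""
    pairs = list(zip(a, b))
    return sum(x[question] == y[question] for x, y in pairs) / len(pairs)

reviewer_a = [{"task_completed": True, "followed_user_constraints": True, "helpfulness": 4},
              {"task_completed": False, "followed_user_constraints": True, "helpfulness": 2}]
print(summarize(reviewer_a))
```

Low agreement on a question is a signal to revisit its wording or the prototypical examples reviewers were calibrated on.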

Context: Google was building a human-in-the-loop personal assistant product that combined humans and AI to fulfill user tasks.

Goal: They needed to assess the quality of task fulfillment to understand how the product was delivering value.

Method: The rubric consisted of 26 questions and included simple criteria, specific desired outcomes, and subjective evaluations.

Training: The rubric was calibrated internally between Product, UX, and UXR, and then the team of reviewers was trained on the rubric to learn how to assess conversations.

Assessment: After each conversation, the transcript was sent to a human reviewer to manually evaluate.

Outcome: The data was used to identify opportunities to improve the product experience.

Example questions


Remember: questions need to be scalable and focused on simple yes/no detection and specific outcomes.

Another example: Jasper's Brand Voice (see their website).
