Scraps from various sources and my own writings on Digital, Artificial Intelligence, Disruption, Agile, Scrum, Kanban, Scaled Agile, XP, TDD, FDD, DevOps, Design Thinking, etc.

Friday, December 11, 2009

Remote Desktop Services / Terminal Services

Source: Wikipedia...

Wednesday, October 07, 2009

Create sample database

Creating a sample database

For information about configuring vendor databases, see the IBM Rational ClearQuest and ClearQuest MultiSite Installation and Upgrade Guide.

- Start the Maintenance Tool. Then click Schema Repository > Create.

- In the Existing Connections pane, enter a name for the schema repository connection in the highlighted item and press Enter.

By default, the first connection name is 7.0.0. You can rename the connection later.

- In the Schema Repository Properties area, select a database vendor and enter the required properties. The properties for each database are different.

- If you select Microsoft SQL Server, Oracle DBMS, or IBM DB2, enter the physical database name of one of the empty databases that you created with the vendor database tools.

When you create a DB2 schema repository, the User Name property must be a user identity that has database administrator authority for the DB2 database.

- If you select Microsoft Access, enter a physical database name; the Maintenance Tool creates a new database with that name.

- Click Next.

- In the ClearQuest Data Code Page window, select the data code page to use with the new schema repository. You can choose any data code page supported by Rational ClearQuest. The default data code page is the one associated with the user-interface language for the operating system on which the Maintenance Tool is running, if that code page is supported. If that code page is not supported, the default data code page is ASCII.

- Click Create sample database.

Schema Repository

Creating a schema repository

- Start the Maintenance Tool. Then click Schema Repository > Create.

- In the Existing Connections pane, enter a name for the schema repository connection in the highlighted item and press Enter.

By default, the first connection name is 7.0.0. You can rename the connection later.

- In the Schema Repository Properties area, select a database vendor and enter the required properties. The properties for each database are different.

- If you select Microsoft SQL Server, Oracle DBMS, or IBM DB2, enter the physical database name of one of the empty databases that you created with the vendor database tools.

When you create a DB2 schema repository, the User Name property must be a user identity that has database administrator authority for the DB2 database.

- If you select Microsoft Access, enter a physical database name; the Maintenance Tool creates a new database with that name.

- Click Next.

- In the ClearQuest Data Code Page window, select the data code page to use with the new schema repository. You can choose any data code page supported by Rational ClearQuest. The default data code page is the one associated with the user-interface language for the operating system on which the Maintenance Tool is running, if that code page is supported. If that code page is not supported, the default data code page is ASCII.

- You have the option of creating a sample user database.

- Click Finish.

Schemas and Schema Repository

Schemas and schema repositories

A Rational ClearQuest schema is a complete description of the process model for all the components of a user database. This includes a description of the states and actions of the model, the structure of the data that can be stored about each individual component, hook code or scripts that can be used to implement business rules, and the forms and reports used to view and input information about the component. Rational ClearQuest provides out-of-the-box schemas that can be customized for a client installation.

A schema is a pattern or blueprint for Rational ClearQuest user databases. When you create a user database to hold records, the database follows the blueprint defined in a schema. However, a schema is not a database itself; it does not hold any records about change requests, and it does not change when users add or modify records in the user database.

Rational ClearQuest stores schemas in the schema repository. The schema repository is the master database that contains metadata about the user databases. It does not contain user data.

A schema repository can store multiple schemas, for example, one schema for defect change requests and another schema for feature enhancement change requests. [Source:IBM]

Tuesday, August 25, 2009

Choose www or no www

Introduction

When you write a URL for your website, it can be written with or without "www," like this:

- http://www.webhostingtalk.com/wiki

- http://webhostingtalk.com/wiki

Should you include "www" with your URL?

The same and not the same

With most websites, the URLs with and without "www" in them will point to the same site. However, you can specify each URL (with and without "www") to point to a different IP address by modifying your DNS A record.

Some search engines see the above two URLs as two different URLs. Each URL can have a different PageRank, and by using both, you're diluting the PR for each URL.

For PR and SEO purposes, it's preferable to choose one version or the other to use. There are reasons for each choice. Which one you choose depends mostly on personal preference.

Reasons to include www

- It helps identify a URL as a web address, especially if the domain extension is other than a .com one.

- Many people will type it in anyway.

- For some people, URLs look more visually appealing with it.

Reasons not to include www

- It makes the URL longer, especially if the URL is for a subdomain.

- It's unnecessary.

- For some people, URLs look cleaner without it.

How to redirect from one to the other using .htaccess

If your site is on an Apache server, you can choose to have only the version with or without "www" appear. One will direct to the other.

Add one of the following to your .htaccess file after replacing "example.com" with your domain. The "RewriteEngine on" first line activates mod_rewrite.

To redirect to the URL with www

RewriteEngine On

RewriteCond %{HTTP_HOST} ^example\.com$ [NC]

RewriteRule ^(.*)$ http://www.example.com/$1 [R=301,L]

To redirect to the URL without www

RewriteEngine on

RewriteCond %{HTTP_HOST} !^$

RewriteCond %{HTTP_HOST} ^www\.(.+)$ [NC]

RewriteRule ^(.*)$ http://%1/$1 [R=301,L]
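
The redirect logic can also be mirrored outside Apache, for example to check which URL a given address should end up at. The following is only a minimal Python sketch and not part of the original instructions: the function name `canonical_url` and its `use_www` parameter are hypothetical, and unlike the first rule set it adds "www" to any host that lacks it, not just to one specific domain.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url: str, use_www: bool) -> str:
    """Return the address a 301 redirect would point to (hypothetical helper)."""
    parts = urlsplit(url)
    host = parts.netloc
    has_www = host.lower().startswith("www.")
    if use_www and not has_www:
        # Same effect as redirecting example.com to www.example.com
        host = "www." + host
    elif not use_www and has_www:
        # Same effect as stripping the leading "www." from the host
        host = host[len("www."):]
    return urlunsplit((parts.scheme, host, parts.path, parts.query, parts.fragment))


print(canonical_url("http://example.com/wiki", use_www=True))
print(canonical_url("http://www.example.com/wiki", use_www=False))
```

The path and query string are carried over unchanged, which is what the `$1` back-reference does in the rewrite rules.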

Saturday, June 20, 2009

IBM Rational Jazz...

Over the years, developing software has been compared to many familiar activities - an art, a science, even a manufacturing process. What all of these comparisons miss is the social dimension: software is best developed by a team of people working together, reacting and responding to each other in order to achieve the best outcome. Jazz is an IBM initiative to help make software delivery teams more effective. Inspired by the artists who transformed musical expression, Jazz is an initiative to transform software delivery making it more collaborative, productive and transparent.

The Jazz initiative is composed of three elements:

- An architecture for lifecycle integration

- A portfolio of products designed to put the team first

- A community of stakeholders

An architecture for lifecycle integration

Jazz products embody an innovative approach to integration based on open, flexible services and Internet architecture. Unlike the monolithic, closed products of the past, Jazz is an open platform designed to support any industry participant who wants to improve the software lifecycle and break down walls between tools.

The Jazz integration architecture is designed to give organizations the flexibility to assemble their ideal software delivery environment, using preferred tools and vendors. More than that, it allows them to do so with the flexibility to evolve their environment as their needs change, to move at their own pace, and not to be hindered by the traditional brittle and restricted integrations associated with traditional tools. The Jazz Integration Architecture defines a common set of Jazz Foundation Services that can be leveraged by any Jazz tool, and explains the rules of the road for accessing and utilizing Jazz services. It also incorporates specifications defined by the Open Services for Lifecycle Collaboration project, an independent, multi-vendor effort to define a set of protocols for sharing information across multiple tools and vendors.

A portfolio of products designed to put the team first

The Jazz portfolio consists of a common platform and a set of tools that enable all of the members of the extended development team to collaborate more easily. This reflects our central insight that the center of software development is neither the individual nor the process, but the collaboration of the team. Our newest Jazz offerings are:

Rational Team Concert

A collaborative work environment for developers, architects and project managers with work item, source control, build management, and iteration planning support. It supports any process and includes agile planning templates for Scrum and the Eclipse Way.

Rational Quality Manager

A web-based test management environment for decision makers and quality professionals. It provides a customizable solution for test planning, workflow control, tracking and reporting capable of quantifying the impact of project decisions on business objectives.

Rational Requirements Composer

A requirements definition solution that includes visual, easy-to-use elicitation and definition capabilities. Requirements Composer enables the capture and refinement of business needs into unambiguous requirements that drive improved quality, speed, and alignment.

A community of stakeholders

Jazz is not only the traditional software development community of practitioners helping practitioners. It is also customers and community influencing the direction of products through direct, early, and continuous conversation. We are doing much of our development on jazz.net, out in the open.

Once you join, you can communicate with the development teams, track the progress of builds and milestones, give us direct feedback on what is working and what is not, and submit and track defect and enhancement requests. You also have full visibility to our detailed plans, status, and progress. At the core of these benefits, you can experience using our product Web interfaces and see us using our products to develop our products. The benefit of this transparency is that it allows you and other customers to become part of a continuous feedback loop that drives development decisions. By providing your feedback early and often, you can understand and influence release direction and priorities before these decisions are locked down.

Objectives

Our goal is to provide a frictionless work environment that helps teams collaborate, innovate, and create great software. To that end, we are focusing on driving fundamental improvements in team collaboration, automation, and reporting across the software lifecycle.

Collaboration

Traditionally, software development has been tooled as if it were a tug-of-war between the productivity of individuals and the automation of processes. Business stakeholders were lucky if they got any consideration at all between major "reviews" and "handoffs". Jazz tools reflect the insight that the center of software development is neither the individual nor the process, but the collaboration within the team. It also recognizes that the team extends beyond the core practitioners to include everybody with a stake in the success of an initiative. A goal of the Jazz initiative is to enable transparency of teams and projects for continuous, context-sensitive collaboration that can:

- Promote break-through innovation

- Build team cohesion

- Leverage talent across and beyond the enterprise

Automation

Our research shows that nearly all organizations want to reduce bureaucratic roadblocks to development by automating tedious and error-prone tasks and burdensome data entry. Yet they also need to maintain or improve process consistency and governance, and increase insight into real project progress. A goal of the Jazz initiative is to automate processes, workflows and tasks so that organizations can adopt more lean development principles at the pace that makes sense for them. The Jazz initiative endeavors to:

- Improve the support and enforcement of any process, including agile processes

- Reduce tedious and time-consuming manual tasks

- Capture information on progress, events, decisions and approvals without additional data entry

Reporting

Getting fast access to fact-based information is essential to any choreographed work effort. But all too often, software development status is gathered through a tedious, manual reporting effort that is out of date by the time it is collected, correlated, and delivered. The Jazz initiative is focused on delivering real-time insight into programs, projects and resource utilization to help teams:

- Identify and resolve problems earlier in the software lifecycle

- Get fact-based metrics -- not estimates -- to improve decision making

- Leverage metrics for continuous individual and team capability improvement

Wednesday, June 10, 2009

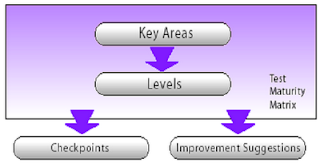

Test Process Improvement

Test Process Improvement (TPI) is a tried-and-tested, structured assessment of an organization’s testing maturity with a view to improving its overall testing and QA effectiveness and efficiency.

The TPI has been developed by Sogeti, a wholly-owned subsidiary of the international Capgemini organization. It goes hand in hand with the Test Management Approach (TMap) which is also a testing methodology from Sogeti.

The TPI methodology is an approach to evaluating the current state of the QA and testing processes in the organization according to 20 key dimensions (Key Areas). It provides a quick, well-structured status report on the existing maturity of the test processes in the organization, as well as a detailed roadmap of the steps needed to increase the maturity and quality of the testing process.

This model has four basic components:

- Key Areas

- Levels

- Checkpoints

- Improvement Suggestions

Key Areas

The 20 key areas are grouped by cornerstone:

- Life Cycle: Test strategy, Life-cycle model, Moment of involvement

- Techniques: Estimating and planning, Test specification techniques, Static test techniques, Metrics

- Infrastructure: Test automation, Test environment, Office environment

- Organization: Commitment and motivation, Test functions and training, Scope of methodology, Communication, Reporting, Defect management, Test-ware management, Test process management

- All Cornerstones: Evaluation, Low level testing

Levels

Each of the above key areas is assessed at various levels like A, B, C and D.

The number of levels is not the same for all the key areas. For example, the ‘Static test techniques’ key area has only two levels (A and B), whereas ‘Test strategy’ has four levels (A, B, C and D).

Checkpoints

Each level has certain checkpoints for each of the key areas.

The test process under assessment should satisfy these checkpoints to be certified for that level.

Improvement Suggestions

The model also includes improvement suggestions to assist the organizations in achieving higher levels of maturity.

Tuesday, June 09, 2009

Monday, May 11, 2009

Defect Removal Efficiency...

A measure of the number of defects discovered in an activity versus the number that could have been found. Often used as a measure of testing effectiveness.

Defect Removal Efficiency (DRE) is a measure of the efficacy of your SQA activities. For example, if the DRE is low during analysis and design, you should spend time improving the way you conduct formal technical reviews.

DRE = E / ( E + D )

Where E = No. of Errors found before delivery of the software and D = No. of Errors found after delivery of the software.

For example, with E = 320 and D = 30: DRE = 320 / (320 + 30) × 100 ≈ 0.914 × 100 ≈ 91%
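
The same calculation can be sketched in a few lines of Python. The function name `defect_removal_efficiency` and its parameter names are illustrative assumptions; only the formula DRE = E / (E + D) and the figures 320 and 30 come from the text above.

```python
def defect_removal_efficiency(found_before: int, found_after: int) -> float:
    """DRE = E / (E + D), returned as a fraction between 0 and 1."""
    total = found_before + found_after
    if total == 0:
        raise ValueError("DRE is undefined when no defects were recorded")
    return found_before / total


dre = defect_removal_efficiency(found_before=320, found_after=30)
print(f"DRE = {dre:.1%}")  # prints "DRE = 91.4%"
```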

Friday, March 06, 2009

Friday, February 13, 2009

Performance Testing...

The Performance testing team determines the appropriate strategy for testing applications. One or more of the strategies listed below will be implemented, depending on the available time and budget. The actual estimate will be given during the detailed master performance test planning session.

1. Load testing

2. Stress testing

3. Capacity testing

4. Soak testing

1. Load testing is used to test an application against a requested number of users. The objective is to determine whether the site can sustain this requested number of users with acceptable response times.

2. Stress testing is load testing over extended periods of time to validate an application’s stability and reliability.

3. Capacity testing is used to determine the maximum number of concurrent users an application can manage.

4. Soak testing is used to confirm the stability of the developed solution, to identify application anomalies, potential memory leaks or similar resource issues, and to validate that response times and KPIs are not compromised over an extended period at approximately 75% of peak system load.

1.1 Understanding of the system architecture

The Performance testing team will gain a solid understanding of the system architecture, including:

•Defining the types of routers used in the network setup

•Determining whether multiple servers are being used

•Establishing whether load balancers are used as part of the IP networks to manage the servers

•Finding out which servers are configured into the system (Web, application, database)

Finally the Performance testing team will determine

•The number of load generators or test machines needed to run the appropriate number of virtual users.

•Whether the testing tool has multithreading capabilities and can maximize the number of virtual users being run. Ultimately, the goal is to minimize system resource consumption while maximizing the virtual user count.

1.2 Creating Virtual User Scripts

•The Performance testing team will use a script recorder to capture the business processes as test scripts, identifying and recording each business process from start to finish.

•Defining transactions within these scripts helps to break down the individual actions and to measure the time each one takes within a business process.

1.3 Defining User Behavior

•The Performance testing team will study and finalize the run-time settings that define the way that the script runs in order to accurately emulate real users.

•The Performance testing team will also decide how to account for system response times, which vary with connection speed because users connect to the Web system in different ways (e.g., modem, LAN/WAN), including over PPP at varying modem speeds (e.g., 28.8 Kbps, 56 Kbps, etc.).

•The Performance testing team will also put in place a mechanism for error handling during the execution.

1.4 Creating a Load Test Scenario

•The Performance testing team will define individual groups based on common user transactions.

•They will define and distribute the total number of virtual users.

•The Performance testing team will determine which load generating machines the virtual users will run on.

•The Performance testing team will specify how the scenario will run: in staggered or parallel formation.

1.5 Running the Load Test Scenario and Monitoring the Performance

•The Performance testing team will monitor the application’s performance in real time, so that it can be viewed at any point during the test.

•Every component of the system will be monitored, depending on the available monitors and tool capacity, for early detection of performance bottlenecks during test execution:

Network

Web server

Application server

Database

All server hardware

1.6 Analyzing Results

•The performance testing team will collect and process the data (series of graphs and reports) to resolve performance bottlenecks.

•This will be used to determine the maximum number of concurrent users until response times become unacceptable.

•Depending on the agreed scope and on time and budget constraints, the following typical performance-related tests can be carried out at various phases.

Performance Testing - Pictorial Representation

2. Performance Requirement Analysis

The first step in a Web site load test is to measure the current load levels as accurately as possible.

2.1 Measuring Current Load Levels (Average, Peak, Concurrent)

The best way to capture the nature of Web site load is to identify and track a set of key user session variables that are applicable and relevant to Web site traffic.

Some of the variables that could be tracked include:

•The length of the session (measured in pages)

•The duration of the session (measured in minutes and seconds)

•The type of pages that were visited during the session (e.g., home page, product information page, credit card information page etc.)

•The typical/most popular ‘flow’ or path through the web site

•The % type of users (new user vs. returning registered user)

Measure how many people visit the site per week/month or day. Then break down these current traffic patterns into one-hour time slices and identify

•The peak-hours

•The numbers of users during peak hours

•The average number of users

•The number of concurrent users
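
Breaking traffic into one-hour slices can be sketched as follows. This is a rough illustration only: it assumes the session start times have already been extracted from the web server logs as `datetime` values, the function name `hourly_profile` is hypothetical, and the average is taken over hours that had at least one session. Counting concurrent users would additionally need session durations, which this sketch does not cover.

```python
from collections import Counter
from datetime import datetime


def hourly_profile(session_starts):
    """Group session start times into one-hour slices.

    Returns (sessions per slice, peak hour, sessions in peak hour,
    average sessions per active hour).
    """
    counts = Counter(
        ts.replace(minute=0, second=0, microsecond=0) for ts in session_starts
    )
    peak_hour, peak_count = max(counts.items(), key=lambda item: item[1])
    average = sum(counts.values()) / len(counts)
    return counts, peak_hour, peak_count, average


# Invented sample data, for illustration only
starts = [
    datetime(2009, 2, 13, 9, 5),
    datetime(2009, 2, 13, 9, 40),
    datetime(2009, 2, 13, 10, 15),
]
counts, peak_hour, peak_count, average = hourly_profile(starts)
print(peak_hour, peak_count, average)
```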

2.2 Estimating Target Load Levels

Once the current load levels are identified, the next step is to understand as accurately and as objectively as possible the nature of the load that must be generated during the testing.

Using the current usage figures, estimate

•how many people will visit the site per week/month or day

•divide that number to attain realistic peak-hour scenarios

There are four key variables that must be understood in order to estimate target load levels:

•how the overall amount of traffic to the Web site is expected to grow

•the peak load level which might occur within the overall traffic

•how quickly the number of users might ramp up to that peak load level

•how long that peak load level is expected to last

Once an estimate of overall traffic growth is ready, the peak level that might be expected within that overall volume needs to be estimated.

2.3 Estimating Test Duration

The length of the user session can be used to determine the load test duration. A Web site that copes well with a peak level for five or ten minutes may crumble if that same load level is sustained for longer. It is necessary to identify

•the duration of the average user session

•the duration of the peak

One should also take into account the fact that users will hit the web site at different times, and that during the peak hour the number of concurrent users will likely build up gradually to reach the peak number of users. Identify the rate at which the number of users builds up:

•the "Ramp-up Rate" should be factored into the load test scenarios
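
As an illustration of factoring a ramp-up rate into a scenario, the sketch below lists how many virtual users are active at the end of each minute until the peak is reached. It assumes a constant ramp-up rate expressed in users per minute; the function name `ramp_up_schedule` and the sample figures are hypothetical.

```python
def ramp_up_schedule(peak_users: int, users_per_minute: int) -> list[int]:
    """Active virtual users at the end of each minute of the ramp-up."""
    if users_per_minute <= 0:
        raise ValueError("users_per_minute must be positive")
    schedule = []
    active = 0
    while active < peak_users:
        # Add one minute's worth of users without overshooting the peak
        active = min(active + users_per_minute, peak_users)
        schedule.append(active)
    return schedule


print(ramp_up_schedule(peak_users=100, users_per_minute=30))  # prints [30, 60, 90, 100]
```

The length of the returned list is the ramp-up time in minutes, which can be added to the planned peak duration when estimating the total test duration.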

2.4 Scenario Identification

Identify the scenarios from the information gathered during the analysis of the current traffic:

•the scenarios that are to be used

•various user roles

•% of users associated with the scenarios

The identified scenarios aim to accurately emulate the behavior of real users navigating through the Web site.

3. Performance Scripting and Execution

Once the performance requirements are completely and correctly identified, the next step is scenario designing, scripting and execution.

3.1 Performance Test Design

The scenarios should be created in such a way that they simulate real-life loads as closely as possible.

The key elements of a load test design are:

•test objective

•pass/fail criteria

•Scenarios

Load Test Objective: The objective of this load test is to determine if the Web site, as currently configured, will be able to handle the X number of sessions/hr peak load level anticipated. If the system fails to scale as anticipated, the results will be analyzed to identify the bottlenecks.

Pass/Fail Criteria: The load test will be considered a success if the Web site handles the target load of X sessions/hr while maintaining the pre-defined average page response times (if applicable). The page response time will be measured as the elapsed time between a page request and the time the last byte is received.

Scenarios: To simulate a real-life load, it is of the utmost importance to assign a real-life ratio of virtual users to the scenarios and to add rendezvous points at appropriate places to study server behavior.
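
The pass/fail criteria can be expressed as a simple check. This is a sketch under stated assumptions rather than the behaviour of any particular tool: the function name, its parameters, and the sample numbers are hypothetical, and page response times are assumed to be in seconds.

```python
from statistics import mean


def load_test_passed(sessions_per_hour, target_sessions_per_hour,
                     page_response_times, max_avg_response_time):
    """Pass only if the target load was reached and the average page
    response time stayed within the pre-defined limit."""
    reached_target = sessions_per_hour >= target_sessions_per_hour
    avg_response = mean(page_response_times)
    return reached_target and avg_response <= max_avg_response_time


# Invented figures: 1,200 sessions/hr achieved against a target of 1,000
print(load_test_passed(1200, 1000, [1.2, 1.8, 2.0], max_avg_response_time=2.0))
```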

3.2 Script Preparation

Since in most cases the user sessions follow just a few navigation patterns, it may not be necessary to write hundreds of individual scripts to achieve realism. If carefully chosen, a dozen scripts will take care of most Web sites.

3.3 Script Execution

Scripts should be combined to describe a load testing scenario. A basic scenario includes:

•the scripts that will be executed

•the percentages in which those scripts will be executed

•description of how the load will be ramped up
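
One way to turn script percentages into whole numbers of virtual users is sketched below. The function name `distribute_users`, the script names, and the percentages are hypothetical. Leftover users after rounding down are given to the scripts with the largest fractional share, so that the total always matches.

```python
def distribute_users(total_users: int, script_mix: dict[str, float]) -> dict[str, int]:
    """Split total_users across scripts according to percentage weights."""
    if abs(sum(script_mix.values()) - 100.0) > 1e-9:
        raise ValueError("script percentages must add up to 100")
    exact = {name: total_users * pct / 100 for name, pct in script_mix.items()}
    allocation = {name: int(value) for name, value in exact.items()}
    leftover = total_users - sum(allocation.values())
    # Hand the remaining users to the scripts with the largest fractional parts
    by_fraction = sorted(
        exact, key=lambda name: exact[name] - allocation[name], reverse=True
    )
    for name in by_fraction[:leftover]:
        allocation[name] += 1
    return allocation


print(distribute_users(100, {"browse": 60, "search": 30, "checkout": 10}))
```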

It is recommended to start with a test at 50% of the expected virtual user capacity for 15 minutes and a medium ramp rate. CPU, memory, and network usage should be monitored.

After making any system adjustments, run the test again or proceed to 75% of the expected load. Continue testing at 100% and then up to 150% of the expected load, monitoring and making the necessary adjustments to the system as you go along.
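
The staged approach (50%, 75%, 100%, then 150% of the expected load) can be turned into concrete virtual user counts with a small helper. The function name `load_stages` and the sample figure of 1,000 expected users are assumptions made for illustration.

```python
def load_stages(expected_users: int, fractions=(0.5, 0.75, 1.0, 1.5)) -> list[int]:
    """Virtual user count for each stage of the test run."""
    return [round(expected_users * fraction) for fraction in fractions]


print(load_stages(1000))  # prints [500, 750, 1000, 1500]
```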

4. Performance Result Analysis and Reporting

Upon execution of performance testing, the results are analyzed using various graphs and reports are generated.

4.1 System Performance Monitoring

It is vital during the execution phase to monitor all aspects of the web site. This includes measuring and monitoring the CPU usage and performance aspects of the various components of the web site – i.e. not just the web server, but the database and other parts as well (such as firewalls, load balancing tools etc.)

Therefore, it is necessary to use (install if necessary) performance monitoring tools to check each aspect of the web site architecture during the execution phase.

4.2 Results Analysis

The following results are highlighted and presented in the report:

•Page response time by load level

•Page views and page hits by load level

•Throughput

•Completed and abandoned sessions by load level

•HTTP and network errors by load level

•Concurrent users by minute

•Full detailed report which includes response time by page and by transaction, analysis and recommendations

5. Project Deliverables

•Performance Test Plan

  o Covers the test entry criteria, exit criteria, schedule, pass/fail criteria

•Test Scripts

  o VUGen Scripts generated during recording of user actions

•List of Transactions

  o A detailed listing of the transactions that will be defined during recording of user actions. The tool generates performance reports for these transactions

•Test Report

  o Covers the summary of findings and results of tests/ recommendations

•Executive summary

  o For recommendations

Apart from these, the following are some of the typical project deliverables that can be provided after the execution and analysis phase of this engagement. These deliverables depend on the performance testing tool and the application under test, and may not be exactly reproducible.

A detailed analysis report based on the below mentioned graphs

This is a generic graph showing performance under load. This graph is useful in pinpointing bottlenecks. For example, if a tester wants to inquire about the user threshold at 2 seconds, the results above show a maximum of 7,500 concurrent users.

This graph is a generic display of the number of transactions that passed or failed. In the above example, if the goal is to obtain a 90-percent passing rate for the number of transactions, then transaction 2 fails. Approximately 33 percent out of 100 transactions failed.

The following are typical performance graphs that will be delivered as one of the deliverables.

Percentile: Analyzes percentage of transactions that were performed within a given time range.

Performance under load: Indicates transaction times relative to the number of virtual users running at any given point during the scenario.

Transaction performance: Displays the average time taken to perform transactions during each second of the scenario run.

Transaction performance summary: Displays the minimum, maximum and average performance times for all the transactions in the scenario.

Transaction performance by virtual user: Displays the time taken by an individual virtual user to perform transactions during the scenario.

Transaction distribution: Displays the distribution of the time taken to perform a transaction
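
The figures behind the percentile and summary graphs can be reproduced from raw transaction times. This is only a minimal sketch: it assumes the times are available as a list of seconds, and the function names `transaction_summary` and `percent_within` and the sample data are hypothetical and not taken from any testing tool.

```python
from statistics import mean


def transaction_summary(times):
    """Minimum, maximum and average transaction time."""
    return {"min": min(times), "max": max(times), "avg": mean(times)}


def percent_within(times, limit):
    """Percentage of transactions completed within `limit` seconds."""
    return 100 * sum(1 for t in times if t <= limit) / len(times)


times = [1.0, 2.0, 3.0, 4.0]  # invented sample data, in seconds
print(transaction_summary(times))
print(percent_within(times, 2.0))  # prints 50.0
```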

This performance graph displays the number of transactions that passed, failed, aborted or ended with errors. For example, these results show the “Submit Search” business process passed all its transactions at a rate of approximately 96 percent.

This performance graph displays the minimum, average and maximum response times for all the transactions in the load test. This graph is useful in comparing the individual transaction response times in order to pinpoint where most of the bottlenecks of a business process are occurring. For example, the results of this graph show that “FAQ” business process has an average transaction response time of 1.779 seconds. This would be an acceptable statistic in comparison to the other processes.

The following typical Web graphs can be delivered as part of the deliverables:

Connections per second: Shows the number of connections made to the Web server by virtual users during each second of the scenario run.

Throughput: Shows the amount of throughput on the server during each second of the scenario run.

This Web graph displays the number of hits made on the Web server by Vusers during each second of the load test. This graph helps testers evaluate the amount of load Vusers generate in terms of the number of hits. For instance, the results provided in this graph indicate an average of 2,200 hits per second against the Web server.

This Web graph displays the amount of throughput (in bytes) on the Web server during the load test. This graph helps testers evaluate the amount of load Vusers generate in terms of server throughput. For example, this graph reveals a total throughput of over 7 million bytes per second.

Regression Testing...

1. REGRESSION TESTING – PROCESS

1.1 PERFORM IMPACT ANALYSIS

1.2 ESTABLISH TEST OBJECTIVES

1.3 CREATE REGRESSION TEST PLAN

1.4 IDENTIFY/CREATE REGRESSION TEST CASES

1.5 ENSURE REGRESSION TESTING ENVIRONMENT

1.6 EXECUTE REGRESSION TESTING

1.7 REVIEW REGRESSION TEST RESULTS

1.8 PREPARE TEST REPORT

1.9 EXIT CRITERIA

1.10 MEASUREMENTS

2. TOOLS FOR REGRESSION TESTING

3. METRICS FOR REGRESSION TESTING

1. Regression Testing – Process

A. Perform Impact Analysis

B. Establish Test Objectives

C. Create Regression Test Plan

D. Identify/Create Regression Test Cases

E. Ensure Regression Testing Environment

F. Execute Regression Testing

G. Review Regression Test Results

H. Prepare Test Report

I. Exit Criteria

J. Measurements

1.1 Perform Impact Analysis

The Team shall understand the new requirements and analyze the impact of the added functionality on the existing features. Based on this analysis, the impacted functionalities and the new functionalities shall be identified for testing.

1.2 Establish Test Objectives

The Team shall study and review each new requirement and identify the “key” system functions that need to be tested. Test objectives for each function shall be specified, and the automation scope and tool shall be identified.

1.3 Create Regression Test Plan

The TL shall prepare the Regression Test Plan based on the new functionality introduced by the enhancements and on the impacted areas. The scope (the features to be tested) shall be specified, and the test criteria, including the entry and exit criteria, shall be defined. The resource requirements shall be identified, and responsibilities shall be assigned to the resources. Metrics to be captured shall be identified.

1.4 Identify/Create Regression Test Cases

The Test Designer shall identify a representative sample / subset of test cases to test impacted functionalities and prepare test cases for new functional scenarios.
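One way to pick the subset of test cases is to map each test case to the functionalities it covers and keep those that touch an impacted area. The following is a minimal sketch under that assumption; the function name `select_regression_cases`, the test case IDs and the functionality names are all hypothetical.

```python
def select_regression_cases(test_cases, impacted):
    """Return the IDs of test cases that cover at least one impacted functionality."""
    impacted = set(impacted)
    return [
        case_id
        for case_id, covered in test_cases.items()
        if impacted & set(covered)
    ]


# Hypothetical mapping of test case ID to the functionalities it exercises.
test_cases = {
    "TC-01": ["login"],
    "TC-02": ["search", "checkout"],
    "TC-03": ["reports"],
}

# Hypothetical result of the impact analysis.
selected = select_regression_cases(test_cases, ["checkout", "login"])
print(selected)
```

Test cases for new functional scenarios still have to be written by hand; this sketch only covers the selection of existing ones.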

1.5 Ensure Regression Testing Environment

The TL shall ensure that the test environment required for regression testing, as identified in the Regression Test Plan (RTP), is available. It is recommended that this setup be separate from the development environment. A smoke test shall be executed to ascertain whether the build is stable and can be considered for further testing.

1.6 Execute Regression Testing

Regression Test Cases shall be executed by the Tester manually and/or through automation, based on project requirements. All regression test runs shall be recorded in the Regression Test Cases/Test Report. If the observed behavior matches the expected behavior, then “P” (Pass) shall be entered in the observed behavior column; otherwise “F” (Fail) shall be entered.

For every run, the observed behavior shall be recorded. Test runs shall be repeated until all the defects are closed. All defects shall be recorded by the concerned tester. Defect reporting and closing shall be done using the defect tracking tool.
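The pass/fail marking described above can be sketched in a few lines. This is a minimal illustration, assuming each run is kept as a simple record with expected and observed behavior; the function name `mark_result`, the record layout and the sample data are hypothetical.

```python
def mark_result(expected, observed):
    """Return 'P' when the observed behavior matches the expected one, otherwise 'F'."""
    return "P" if observed == expected else "F"


# Hypothetical test runs, for illustration only.
runs = [
    {"case": "TC-01", "expected": "Welcome page shown", "observed": "Welcome page shown"},
    {"case": "TC-02", "expected": "Order confirmed", "observed": "Error 500"},
]

for run in runs:
    run["result"] = mark_result(run["expected"], run["observed"])

# Failed cases are the ones to log as defects and rerun in the next cycle.
failed = [run["case"] for run in runs if run["result"] == "F"]
print(failed)
```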

1.7 Review Regression Test Results

The Regression Test results shall be reviewed by the TL. The TL may assign the task of review within the team. The reviewer may take some sample test cases and rerun them to verify the correctness of the results. The reviewer shall verify the following:

• All the relevant test cases are executed

• The chosen test cases cover the main scenarios

• The testing is as per the corresponding test plan

• Identified defects are captured and analyzed

1.8 Prepare Test Report

The TL shall prepare the Test Summary Report after evaluation. Metrics specified in the test plan shall be prepared and analyzed.

Test Summary Report shall cover Test Execution and Defect Data summary. Based on the completion criteria, testing shall be signed off by the Test Manager.

1.9 Exit Criteria

Regression testing is complete when all the criteria mentioned in the agreement are met.

Regression testing can be considered finished on the following basis:

• When all the testing cycles are covered

• When the enhancements/changes are found to meet their requirements

A proper Signoff document should be prepared.

1.10 Measurements

Use the following Regression Testing metrics to gauge the effectiveness of this procedure:

• Defect Slippage

• Defect Removal Efficiency

• Effort Estimation Variance

• Schedule Estimation Variance

• Productivity

• Defect Detection Rate
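The source does not define how these metrics are calculated. As a hedged illustration, two of them can be computed with commonly used conventions: defect removal efficiency as the share of all known defects that were found before release, and estimation variance as the deviation of the actual value from the estimate, relative to the estimate. The function names and sample figures below are hypothetical, and a project may define these metrics differently.

```python
def defect_removal_efficiency(found_before_release, found_after_release):
    """Percentage of all known defects that were found before release (assumed definition)."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return 100.0 * found_before_release / total


def estimation_variance(estimated, actual):
    """Percentage deviation of the actual value from the estimate (assumed definition)."""
    if estimated == 0:
        raise ValueError("estimate must be non-zero")
    return 100.0 * (actual - estimated) / estimated


# Hypothetical figures, for illustration only.
dre = defect_removal_efficiency(found_before_release=45, found_after_release=5)
effort_variance = estimation_variance(estimated=40, actual=50)  # person-days
print(f"DRE: {dre:.1f}%  effort variance: {effort_variance:.1f}%")
```

The same `estimation_variance` function applies to schedule figures when both arguments are durations in the same unit.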

2. Tools for Regression Testing

The following tools are recommended for automated regression testing:

• Mercury WinRunner

• Rational Robot

• Mercury Quick Test Professional

• Silk Test

3. Metrics for Regression Testing

Capture the following metrics for Regression Testing.

• Phase wise Defect removal efficiency and Total defect removal efficiency

• Failure rate/ Test accuracy/ Defect density

• Completeness/ Test coverage/ Defect indices

• Run reliability/ Error distribution/ Fault-days number

• Requirements traceability/ Cause and effect graphing

• No. of conflicting requirements/ No. of entries and exits per module

• Review Effectiveness/ Delivered Defect Density

• Testing Effectiveness/ Testing Efficiency/ Test Case Design Effectiveness

• Phase wise Defect Density and Cumulative defect density

• Cost of Poor Quality/ Cost of Quality
